These days, everyone is talking about GANs like BigGAN and StyleGAN and their remarkable, diverse results on massive image datasets. Yeah, the results are pretty cool! However, this has drawn attention away from other generative models, such as autoregressive models and variational autoencoders. So today, we are going to understand one of these overlooked generative models: MADE (Masked Autoencoder for Distribution Estimation).

Generative models are a big part of deep unsupervised learning. They come in two types: explicit models, in which we can explicitly define the form of the data distribution, and implicit models, in which we cannot explicitly define the density of the data. MADE is an example of an explicit model with tractable density estimation. Its aim is to estimate a distribution from a set of examples.

The model masks the autoencoder’s parameters to impose autoregressive constraints: each input can only be reconstructed from the inputs that precede it in a given ordering. The autoencoder’s outputs can then be interpreted as a set of conditional probabilities, and their product as the full joint probability.

An autoregressive model is a time series model that uses observations from previous time steps as inputs to a regression equation to predict the value at the next time step.
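To make the definition concrete, here is a minimal AR(p) prediction sketch. The coefficients and intercept are hypothetical, picked only for illustration; the point is that the next value is a regression on the values before it, which is the same idea MADE applies across the dimensions of a data vector.

```python
def ar_predict(history, coeffs, intercept=0.0):
    """Predict the next value of an AR(p) series.

    x_t = intercept + coeffs[0] * x_{t-1} + ... + coeffs[p-1] * x_{t-p}
    """
    p = len(coeffs)
    if len(history) < p:
        raise ValueError("need at least p past observations")
    recent = history[-p:][::-1]  # x_{t-1}, x_{t-2}, ..., x_{t-p}
    return intercept + sum(c * x for c, x in zip(coeffs, recent))


series = [1.0, 2.0, 3.0]
# Hypothetical AR(2) coefficients, for illustration only.
print(ar_predict(series, coeffs=[0.5, 0.25], intercept=0.1))
```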

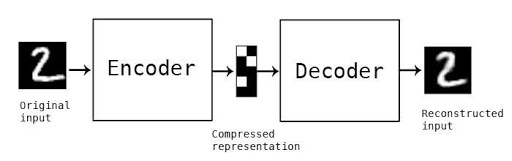

Autoencoders

An autoencoder is an unsupervised neural network that learns to compress and encode data efficiently, then learns to reconstruct the data from the reduced encoded representation into an output that is as close to the original input as possible.

Autoencoder for MNIST

The lower-dimensional latent representation is less noisy than the input and retains the essential information of the input image, so it can be used to generate an image that differs from the input image but still lies within the input data distribution. By forcing the data through a dimensionally reduced latent representation z, we prevent the model from simply copying the input image to its output.


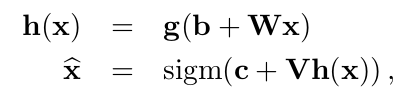

$$h(x) = g(b + Wx)$$

$$\hat{x} = \mathrm{sigm}(c + V h(x))$$

where W and V are weight matrices, b and c are bias vectors, g is a non-linear activation function and sigm is the sigmoid function.
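A minimal pure-Python sketch of these two equations follows. The choice of tanh for g, the layer sizes and the random weights are assumptions for illustration, not values from the paper.

```python
import math
import random


def sigm(a):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-a))


def autoencoder_forward(x, W, b, V, c, g=math.tanh):
    """Shallow autoencoder: h = g(b + W x), x_hat = sigm(c + V h).

    W is K x D (list of rows), b has length K, V is D x K, c has length D.
    """
    h = [g(b_k + sum(w * x_d for w, x_d in zip(row, x))) for row, b_k in zip(W, b)]
    return [sigm(c_d + sum(v * h_k for v, h_k in zip(row, h))) for row, c_d in zip(V, c)]


rng = random.Random(0)
D, K = 4, 3  # input size and number of hidden units (illustrative)
W = [[rng.gauss(0, 1) for _ in range(D)] for _ in range(K)]
V = [[rng.gauss(0, 1) for _ in range(K)] for _ in range(D)]
b, c = [0.0] * K, [0.0] * D

x = [1, 0, 1, 1]
x_hat = autoencoder_forward(x, W, b, V, c)
print([round(p, 3) for p in x_hat])
```

Every output lies in (0, 1), which is what lets us read it as a probability in the next step.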

The cross-entropy loss of the above autoencoder is

$$\ell(x) = \sum_{d=1}^{D} -x_d \log \hat{x}_d - (1 - x_d) \log(1 - \hat{x}_d)$$

We can treat $\hat{x}_d$ as the model’s probability that $x_d$ is 1, so $\ell(x)$ can be understood as a negative log-likelihood function. The autoencoder can then be trained with a gradient descent optimization algorithm to find the optimal parameters (W, V, b, c) and estimate the data distribution. But the loss function isn’t actually a proper log-likelihood function. The implied data distribution

$$q(x) = \prod_{d=1}^{D} \hat{x}_d^{\,x_d} (1 - \hat{x}_d)^{1 - x_d}$$

isn’t normalized: in general $\sum_x q(x) \neq 1$. This is because every $\hat{x}_d$ is computed from the whole input, including $x_d$ itself; an autoencoder that simply learns to copy its input would assign $q(x) = 1$ to every x. So the outputs of the autoencoder cannot be used to estimate density.
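The sketch below evaluates $\ell(x)$ and sums the implied $q(x) = \exp(-\ell(x))$ over all $2^D$ binary vectors. The "autoencoder" here is a hypothetical stand-in that has learned to copy its input almost perfectly, which is exactly the failure mode that leaves q unnormalized.

```python
import itertools
import math


def cross_entropy(x, x_hat):
    """l(x) = sum_d -x_d log x_hat_d - (1 - x_d) log(1 - x_hat_d)."""
    return sum(-xd * math.log(p) - (1 - xd) * math.log(1 - p) for xd, p in zip(x, x_hat))


def near_identity(x, eps=0.1):
    """Hypothetical 'autoencoder' that copies its input almost exactly."""
    return [1 - eps if xd == 1 else eps for xd in x]


D = 3
# q(x) = exp(-l(x)) = prod_d x_hat_d^x_d * (1 - x_hat_d)^(1 - x_d)
total = sum(math.exp(-cross_entropy(x, near_identity(x)))
            for x in itertools.product([0, 1], repeat=D))
print(total)  # approximately 8 * 0.9**3 = 5.832, far from 1
```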

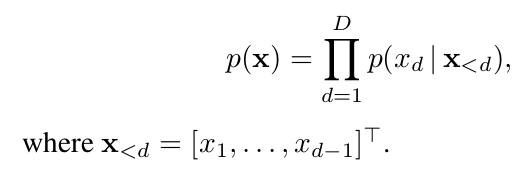

Distribution Estimation as Autoregression

Now we want to impose a property on autoencoders such that their outputs can be used to obtain valid probabilities. By using the autoregressive property, we can transform the traditional autoencoder approach into a fully probabilistic model.

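By the chain rule, we can write the joint probability as a product of conditional probabilities:

$$p(x) = \prod_{d=1}^{D} p(x_d \mid x_{<d})$$

where $x_{<d} = [x_1, \ldots, x_{d-1}]$. If each output is defined as $\hat{x}_d = p(x_d = 1 \mid x_{<d})$, the cross-entropy loss above becomes a proper negative log-likelihood, $-\log p(x)$. For this to hold, each output $\hat{x}_d$ may depend only on $x_{<d}$, so there must be no computational path between output $\hat{x}_d$ and any of the inputs $x_d, \ldots, x_D$.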
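As a quick check of why this factorization fixes the normalization problem, the sketch below builds hypothetical logistic conditionals that read only $x_{<d}$, multiplies them with the chain rule, and sums p(x) over all binary vectors. The logistic form and the random parameters are assumptions; MADE computes these conditionals with a masked autoencoder instead.

```python
import itertools
import math
import random


def sigm(a):
    return 1.0 / (1.0 + math.exp(-a))


def make_conditionals(D, seed=0):
    """Hypothetical conditionals p(x_d = 1 | x_<d) = sigm(a_d + sum_{j<d} U[d][j] x_j)."""
    rng = random.Random(seed)
    a = [rng.gauss(0, 1) for _ in range(D)]
    U = [[rng.gauss(0, 1) for _ in range(D)] for _ in range(D)]

    def cond(d, x):
        # Only x[0..d-1] are read, so the d-th conditional depends on x_<d alone.
        return sigm(a[d] + sum(U[d][j] * x[j] for j in range(d)))

    return cond


def joint(x, cond):
    """Chain rule: p(x) = prod_d p(x_d | x_<d)."""
    p = 1.0
    for d, xd in enumerate(x):
        p1 = cond(d, x)
        p *= p1 if xd == 1 else 1.0 - p1
    return p


D = 4
cond = make_conditionals(D)
total = sum(joint(x, cond) for x in itertools.product([0, 1], repeat=D))
print(total)  # 1.0 up to floating-point rounding
```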

Masked Autoencoders

So we want to discard these connections by element-wise multiplying each weight matrix by a binary mask matrix whose entries set to 0 correspond to the connections we wish to remove:

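$$h(x) = g(b + (W \odot M^W) x)$$

$$\hat{x} = \mathrm{sigm}(c + (V \odot M^V) h(x))$$

where $M^W$ and $M^V$ are mask matrices of the same dimensions as W and V respectively, and $\odot$ denotes element-wise multiplication. Now we want to design these masks so that they satisfy the autoregressive property.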

To impose the autoregressive property, we first assign each unit in the hidden layer an integer m between 1 and D-1 inclusive. The kth hidden unit’s number m(k) is the maximum number of input units to which it can be connected. The values 0 and D are excluded: m(k) = 0 would make the unit constant, since it would not depend on any input, and m(k) = D would let the unit depend on all D inputs, so no output could use it without violating the autoregressive property.
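For a single hidden layer with the natural ordering 1..D, the rule works out to two masks: hidden unit k may see inputs 1..m(k), and output d may use hidden unit k only if d > m(k). The sketch below builds both masks for a hand-picked m(k) (an illustrative choice, not from the paper) and multiplies them to count input-to-output paths.

```python
def single_layer_masks(m, D):
    """Masks for one hidden layer with the natural input ordering 1..D.

    m[k] is hidden unit k's number m(k), in {1, ..., D-1}.
    M_W[k][d-1] = 1 if m(k) >= d  (hidden unit k may see inputs 1..m(k))
    M_V[d-1][k] = 1 if d > m(k)   (output d may use hidden unit k)
    """
    M_W = [[1 if mk >= d else 0 for d in range(1, D + 1)] for mk in m]
    M_V = [[1 if d > mk else 0 for mk in m] for d in range(1, D + 1)]
    return M_W, M_V


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


D = 3
m = [1, 2, 1, 2]  # illustrative hidden-unit numbers, each in [1, D-1]
M_W, M_V = single_layer_masks(m, D)
# Entry (d', d) counts the paths from input d to output d'.
paths = matmul(M_V, M_W)
for row in paths:
    print(row)  # prints [0, 0, 0], then [2, 0, 0], then [4, 2, 0]
```

Output 1 gets no paths at all, and input 3 feeds nothing, matching the observations listed below.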

There are a few things to notice in the figure above:

- Input 3 is not connected to any hidden unit, because no output may depend on it: it comes last in the ordering.

- Output 1 is not connected to any hidden unit; it is estimated from the bias alone, because it comes first in the ordering and has nothing to condition on.

- If you trace back from the output units to the input units, you can clearly see that the autoregressive property is maintained.

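Let’s consider a multi-layer perceptron with L hidden layers:

$$h^0(x) = x, \qquad h^l(x) = g\left(b^l + (W^l \odot M^{W^l}) h^{l-1}(x)\right) \quad \text{for } l = 1, \ldots, L$$

$$\hat{x} = \mathrm{sigm}\left(c + (V \odot M^V) h^L(x)\right)$$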

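The constraints on the maximum number of inputs to each hidden unit are encoded in the matrices masking the connections between consecutive layers, where $m^l(k)$ is the number of the kth unit in layer l and $m^0(d)$ is the position of input d in the ordering:

$$M^{W^l}_{k',k} = 1_{m^l(k') \ge m^{l-1}(k)} = \begin{cases} 1 & \text{if } m^l(k') \ge m^{l-1}(k) \\ 0 & \text{otherwise} \end{cases}$$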

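The corresponding constraints for the outputs are encoded in the output mask matrix:

$$M^V_{d,k} = 1_{m^0(d) > m^L(k)} = \begin{cases} 1 & \text{if } m^0(d) > m^L(k) \\ 0 & \text{otherwise} \end{cases}$$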

For every layer $l \in \{1, \ldots, L\}$, we set $m^l(k)$ by sampling from a discrete uniform distribution defined on the integers from $\min_{k'} m^{l-1}(k')$ to D-1, whereas $m^0$ is obtained by randomly permuting the ordered vector [1, 2, …, D]. The lower bound guarantees that every hidden unit is connected to at least one unit in the previous layer.

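The matrix product $M^{V,W} = M^V M^{W^L} \cdots M^{W^2} M^{W^1}$ represents the connectivity between inputs and outputs: entry $M^{V,W}_{d',d}$ counts the paths from input d to output d'. Thus, to demonstrate the autoregressive property, we need to show that $M^{V,W}$ is strictly lower triangular with respect to the ordering, i.e. $M^{V,W}_{d',d} = 0$ whenever $m^0(d') \le m^0(d)$. For the natural ordering this is simply $d' \le d$.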
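The sketch below follows the sampling rule described above: it draws $m^0$ as a random permutation, samples each $m^l(k)$ from the stated range, builds the masks, multiplies them into $M^{V,W}$ and checks that an output never receives a path from an input that does not precede it in the ordering. The hidden layer sizes and the seed are illustrative assumptions.

```python
import random


def sample_made_masks(D, hidden_sizes, rng):
    """Sample MADE-style masks.

    m^0 is a random permutation of 1..D; each m^l(k) is drawn uniformly from
    [min_k' m^{l-1}(k'), D-1]. Returns (m^0, masks ordered input -> output).
    """
    m = [list(range(1, D + 1))]
    rng.shuffle(m[0])
    masks = []
    for size in hidden_sizes:
        prev = m[-1]
        cur = [rng.randint(min(prev), D - 1) for _ in range(size)]
        # M^{W^l}[k'][k] = 1 if m^l(k') >= m^{l-1}(k)
        masks.append([[1 if mk2 >= mk1 else 0 for mk1 in prev] for mk2 in cur])
        m.append(cur)
    # M^V[d][k] = 1 if m^0(d) > m^L(k)
    masks.append([[1 if md > mk else 0 for mk in m[-1]] for md in m[0]])
    return m[0], masks


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def connectivity(masks):
    """M^{V,W} = M^V M^{W^L} ... M^{W^1} (left-multiply layer by layer)."""
    result = masks[0]
    for M in masks[1:]:
        result = matmul(M, result)
    return result


rng = random.Random(42)
D = 5
order, masks = sample_made_masks(D, hidden_sizes=[8, 8], rng=rng)
C = connectivity(masks)
# Output i may depend on input j only if m^0(j) < m^0(i).
ok = all(C[i][j] == 0 for i in range(D) for j in range(D) if order[i] <= order[j])
print(order, ok)
```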

Let’s look at an algorithm to implement MADE:

Pseudocode of MADE

Deep NADE models require D feed-forward passes through the network to evaluate the probability p(x) of a D-dimensional test vector, but MADE only requires one pass through the autoencoder.
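To illustrate the single-pass claim, the sketch below builds a tiny one-hidden-layer MADE with the natural ordering and random, untrained weights (the sizes, tanh activation and seed are assumptions), then computes every conditional $\hat{x}_d$ and the negative log-likelihood $-\log p(x)$ from one forward pass. For brevity the masks are applied once when the weights are created; a trainable version would keep the masks and re-apply them in the forward pass so that gradient updates cannot revive masked connections.

```python
import math
import random


def sigm(a):
    return 1.0 / (1.0 + math.exp(-a))


def build_made(D, K, seed=0):
    """Tiny one-hidden-layer MADE with random (untrained) weights, natural ordering."""
    rng = random.Random(seed)
    m = [rng.randint(1, D - 1) for _ in range(K)]  # hidden-unit numbers m(k)
    # W * M^W (element-wise): hidden unit k may see inputs 1..m(k)
    W = [[rng.gauss(0, 1) if m[k] >= d + 1 else 0.0 for d in range(D)] for k in range(K)]
    # V * M^V (element-wise): output d may use hidden unit k only if d > m(k)
    V = [[rng.gauss(0, 1) if d + 1 > m[k] else 0.0 for k in range(K)] for d in range(D)]
    b = [rng.gauss(0, 1) for _ in range(K)]
    c = [rng.gauss(0, 1) for _ in range(D)]
    return W, V, b, c


def made_forward(x, W, V, b, c):
    """One pass: x_hat[d] = p(x_d = 1 | x_<d) for every d at once."""
    h = [math.tanh(bk + sum(w * xd for w, xd in zip(row, x))) for row, bk in zip(W, b)]
    return [sigm(cd + sum(v * hk for v, hk in zip(row, h))) for row, cd in zip(V, c)]


def neg_log_likelihood(x, params):
    """-log p(x) = sum_d -x_d log x_hat_d - (1 - x_d) log(1 - x_hat_d)."""
    x_hat = made_forward(x, *params)
    return -sum(math.log(p) if xd == 1 else math.log(1 - p) for xd, p in zip(x, x_hat))


params = build_made(D=6, K=10)
x = [1, 0, 0, 1, 1, 0]
print(neg_log_likelihood(x, params))
```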

Inference

Essentially, the paper focuses on estimating the distribution of the input data; generating new samples (which we call inference here) isn’t explicitly described in it. It turns out to be quite easy, but a bit slow. The main idea (for binary data) is as follows:

1. Randomly generate a vector x and set i = 1.

2. Feed x into the autoencoder and generate the outputs $\hat{x}$ for the network.

3. Set $p = \hat{x}_i$, the model’s estimate of $p(x_i = 1 \mid x_{<i})$.

4. Sample from a Bernoulli distribution with parameter p and set the input $x_i \sim \mathrm{Bernoulli}(p)$.

5. Increment i and repeat steps 2-4 until i > D.
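The loop above can be sketched as follows. The sampler is generic over a forward function that returns all D conditionals; make_toy_forward is a hypothetical stand-in for a trained MADE (logistic conditionals on $x_{<d}$ with random weights), not the paper’s network. Note that it calls the network D times, once per dimension.

```python
import math
import random


def sample_made(forward, D, rng, x0=None):
    """Draw one binary sample, one dimension per forward pass (natural ordering).

    forward(x) must return [p(x_1 = 1), p(x_2 = 1 | x_1), ..., p(x_D = 1 | x_<D)],
    i.e. output d may depend only on x_<d (the autoregressive property).
    """
    # Step 1: random starting vector (or a caller-supplied one).
    x = list(x0) if x0 is not None else [rng.randint(0, 1) for _ in range(D)]
    for i in range(D):  # i = 0..D-1 here corresponds to i = 1..D in the steps
        p = forward(x)[i]                    # steps 2-3: run the network, read x_hat_i
        x[i] = 1 if rng.random() < p else 0  # step 4: x_i ~ Bernoulli(p)
    return x                                 # step 5: stop once every x_i is sampled


def make_toy_forward(D, seed=0):
    """Hypothetical stand-in for a trained MADE: logistic conditionals on x_<d."""
    prng = random.Random(seed)
    a = [prng.gauss(0, 1) for _ in range(D)]
    U = [[prng.gauss(0, 1) if j < d else 0.0 for j in range(D)] for d in range(D)]

    def forward(x):
        return [1.0 / (1.0 + math.exp(-(a[d] + sum(U[d][j] * x[j] for j in range(D)))))
                for d in range(D)]

    return forward


forward = make_toy_forward(D=8)
print(sample_made(forward, D=8, rng=random.Random(1)))
```

Because output i never looks at positions i and later, the random starting values are overwritten before they can matter: with the same random stream, starting from all zeros or all ones yields the same sample.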

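Inference in MADE is very slow. This isn’t an issue during training, because all of $x_{<d}$ is known when predicting the probability of the dth dimension, so every conditional comes out of a single pass. At inference time, however, we have to generate the dimensions one by one, which takes D sequential passes through the network with no parallelization across dimensions.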

Left: Samples from a 2 hidden layer MADE. Right: Nearest neighbour in binarized MNIST.

Although MADE can generate recognizable 28x28x1 images on the binarized MNIST dataset, generating high-dimensional images is computationally expensive, because sampling needs one forward pass per dimension.

Conclusion

MADE is a straightforward yet efficient approach to estimating a probability distribution from a single pass through an autoencoder. It cannot generate images as good as those of state-of-the-art techniques such as GANs, but it has built a very strong base for tractable density estimation models such as PixelRNN/PixelCNN and WaveNet. Nowadays, autoregressive models are used less often than GANs for image generation, and they remain one of the less explored areas of generative modelling. Nevertheless, their simplicity leaves room for further research in this field.