The following document records the workings and insights of the R&D department of an electrical instrumentation manufacturing company, born and brought up in India in the early 1980s, which eventually extended its roots to more than 70 countries and competes sustainably in the European, Middle Eastern, Russian, South-East Asian, American, Latin American and, of course, Indian markets.

From advanced Multifunction Meters to legacy Analog Panel Meters, from handheld Multimeters to patented Clamp Meters, from Digital Panel Meters to Temperature Controllers, from 10 kV Digital Insulation Testers to 30 kW Solar Inverters, from Current Transformers to Genset Controllers, from Power Factor Controllers to Power Quality Analyzers, from Battery Chargers to Transducers. From making best-selling products to white labeling for German, American, Polish and UK tech giants. From being a major supplier of measuring instruments for BHEL, Railways, NTPC, and manufacturing facilities big and small in India, to being able to send its devices up in SpaceX rockets. This is not a description of a company located in the tech-savvy Silicon Valley of the world’s most advanced nation. This is a description of just one of many such growing companies in the far, obscure industrial regions of our Indian subcontinent.

Purpose of this account:

- To introduce and highlight the main working, thinking and organizing methods of the world that awaits hopeful graduates as they step out of the relatively cozy boundaries of their college campuses.

- To offer testimony to the fact that in the exact same environment, with the exact same people backed by the exact same education system, with the same so-called incompetent Indian working class, a company not only leads a product-based market but also beats its so-called advanced European counterparts, and to bring into collective consciousness an account that seriously challenges the conventional assumption of an ailing Indian manufacturing industry.

- To reinforce and bear witness to the fact that the truths and advice we hear from people around us are not mere variations of pressure in the air; if followed in true spirit, they literally create magic and are destined to bring one to breathtaking, heart-pounding and soul-touching experiences.

Work on Solutions Not on Problems

The key spirit of professional execution is a logical, optimistic, solution-finding approach. The problems in front of all of us are equally compelling and evident, no doubt about that: resources are limited, time is short, skills are moderate, support is missing, and so on. But the point is that the R&D mindset will never accept them as final. If resources are not there, let’s check out savings or a loan; if time is not there, let’s think about multiplexing; if skill is not there, let’s talk to an expert and reach out for help; if support is not there, let’s start reading ourselves. With optimism you ask what exactly the problem is and what needs to be done to counter it. You present yourself with options, select the one with the most logical connections, and go do it. If it fails, you ask the same question again with the same optimism. If you take each decision with logical, grounded thinking, every try almost hits the solution, and in that lies the drive for the next try.

For example, in our setup, even one section of a product (let’s say the LCD) may go through as many as 24 revisions before you uncover the design that has the best readability with the most features, given the space limitations of the mechanical housing. Even at that point, the owner of the design will not say no to a 25th revision if it is better than the 24th. The catch is that at the start someone can simply say no: it is not possible to accommodate all these things on such a small screen, so either readability or extra features can be provided. Logically that is correct, until someone comes along with an optimistic, solution-finding approach and says: let’s first accommodate the unavoidable items, then let’s try some alignments, some tilts, some symbols, some overlapping.

A striking example of human genius: the screen is smaller than the little finger of a 5-year-old, yet it is capable of displaying a great deal of data.

Doing Detailed and Exhaustive Documentation

It is well accepted and proven that if one works well with documents at the office, one is destined to have a peaceful life at home, as one does not have to remember every dumb piece of information. You get flawless access to a kind of time machine: a window to look at your past work and trace any spurious design back to its origin in much less time and with much less frustration. Without documentation, a situation that appears to be perfectly under control can at any point turn into a knife-in-the-windpipe kind of jacked-up mess. So maintaining organized folders and Read_Me files with time stamps and quick notes is indispensable.
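As a small illustration of the time-stamped Read_Me habit, here is a minimal Python sketch. It is only one possible way to do it, not a description of the tooling used in our setup: the helper name `add_note`, the file name `Read_Me.txt` and the time stamp format are all assumptions made for the example.

```python
from datetime import datetime
from pathlib import Path


def add_note(folder, note, when=None):
    """Append one time-stamped quick note to Read_Me.txt inside `folder`.

    `add_note` and the file name Read_Me.txt are illustrative assumptions.
    """
    when = when or datetime.now()            # default to the current time
    readme = Path(folder) / "Read_Me.txt"
    readme.parent.mkdir(parents=True, exist_ok=True)  # create the folder if missing
    line = f"[{when:%Y-%m-%d %H:%M}] {note.strip()}\n"
    with readme.open("a", encoding="utf-8") as f:      # append, never overwrite history
        f.write(line)
    return line
```

Calling something like `add_note("lcd_design", "Rev 24: tried tilted symbols")` after each change leaves one dated line per decision (the folder name and note text here are made up), which is what later lets you trace a design back to its origin.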

Organization of Big and Small Things

Organization of assets and swift, flawless access to our resources always help us do mundane things in a highly efficient manner. Think about it: you are working on your dream project, and just when you get a breakthrough idea, you spend the next two hours searching for a resistor in the mess you created, never find it, and in a snap the time you had to try out that idea is gone. Life is fast for all of us, so having our tools and hacks ready at hand is always advantageous.

From the 15K soldering gun to the 1 Rupee pin that you may use to temporarily replace a fallen button on your shirt, everything should be in its designated place. With that degree of organization of everything around us, one feels the readiness and calm to make it through all those massive problems that all of us have.

Organization of assets not only saves time, money and energy but also creates a welcoming environment to step into. And whichever phase of life we may be in, high-school student, college graduate or professional, we can never isolate our work life from the usual personal life that has to go on alongside. One may fall ill, have unsettled debates with parents, have problems with food, water or home, discomfort with neighbors, heavy traffic, over-chilled office spaces and so on; all that nonsense that always plagues us is, anyway, an inseparable part of life. What walks you through it is the highest degree of organization of small things, big assets and, of course, the thoughts in your head.

Choice is at Last Always Ours!

There comes a time when we all get stuck. Some problems get resolved after a few hours of debugging, some stretch over a day, and some extend up to a working week. Rare are the problems that walk by your side for over a month. If one is sufficiently in tune with the ongoing work, then most of the time our divine intuition lets us get to the root of an issue in one or two shots.

You find that the EEPROM isn’t responding. You take out the datasheet, verify the connections, check the supplies, find a dry cap, give it a magic touch with your soldering gun and, boom, the EEPROM rocks.

You find that the device is not measuring current. You take out the circuit and assembly diagrams, verify the components, and find all good. You take out the DSO, probe across the shunts and find that the resistor is burnt. Replace it with a new one and, boom, that’s fixed.

So, every time, you take the help of logical reasoning about what has to happen to make the thing work, and that pretty much shows the light, eliminating the most obvious causes of the problem one by one. This doesn’t take courage, but the fun starts to fade as we run out of logical possibilities. It is from here that the test of guts starts: when all logical traces have been checked and everything is just as expected, except the final output.

In those moments of defeat and dead ends, one is subjected to an entirely new dimension of thinking, which has a seriously humbling effect on a professional’s character. When you look back at those times of intense desperation, of using your most forceful efforts and still not hitting the mark, the only thing that comes from within is a great calm and respect for the nature of reality, for being whatever it is.

How would you handle a situation in which you accidentally lock a plug-in slot in a 5 Lakh, high-priority, high-use piece of equipment?

How would you handle a situation in which, after months of work, you are just about to hand over a product to production, and the QA team suddenly reports the most dreaded failure of your product, one that is expected to set off a long process of iterative tuning?

How would you handle the situation in which you checked, double-checked, triple-checked and still an error made it into your product’s datasheet?

These types of situations lead to a rush of blood in the veins, ringing in the head, an absolute blow to our spirit and whatnot. But even in that chaos, things really move based on the choices we make. One can accept the truth as it is, choose to ask what needs to be done and take just that one next step to address it, or one can accept feeling desolate, beaten and slapped by life.

Choice is ours!

Try out these fundamental methods of organization, thinking and working, and be astounded by their power.

Conclusion

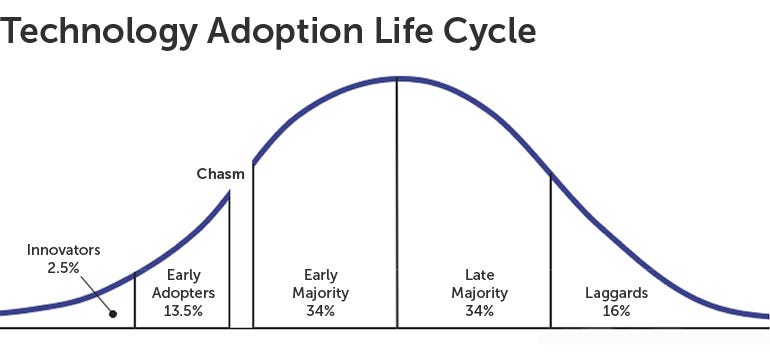

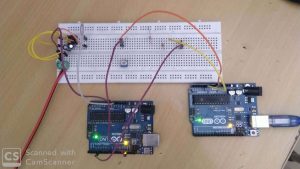

The sudden adoption of course structures inspired by the Western education system has opened up a humongous range of possibilities for young graduates in the Indian education system. A few students find this ideal for their journey of exploration, whereas many struggle to choose from such a large plate of options. Students need to anticipate the common and advanced skills in their field of interest. Getting the intuition behind the theory, equipping oneself with mathematical tools and methods, getting comfortable with open-source environments, becoming fluent in hardware handling, and being able to document and work in an organized and structured manner: all these skills prove to be an asset for every team member during product development.

The IP rights are preserved; the names of the companies and the writer remain anonymous.