Introduction

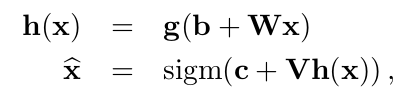

Let us talk about one of the most heated topics in current Indian politics in the most apolitical way possible: the Farm Bills 2020. As you go through this blog, you will learn about the pros, cons and other aspects of the bills that the government claims will revolutionize the Indian agricultural sector. Dig deeper, though, and you realize there is far more to these bills than a list of pros and cons. Sometimes things look pretty on paper but not in the real world, and vice versa. Let us first understand the current system of Indian agriculture: the Mandi system.

The APMC and MSP

In the present system, farmers can sell their crops in two ways. The first is through the Agricultural Produce Market Committee (APMC) mandis, which are regulated by state governments and where licensed traders operate. APMCs were established to protect farmers from exploitation by moneylenders, landlords and retailers. The licensed traders are supposed to buy crops through auctions, which is meant to ensure that farmers receive the best price for their produce. These traders then sell the produce to retailers at a significant margin, so the APMC system acts as a middleman between farmers and retailers and earns good profits. Since all these trades are taxed, they are also one of the major sources of revenue for state governments. Over time, however, these middlemen turned corrupt: they formed cartels, agreed among themselves on prices far lower than before, and kept most of the margin in their own pockets. The result was the exploitation of farmers, defeating the very purpose the APMCs were created to serve.

But there is still a ray of hope offered by the government: a second option called the MSP, or Minimum Support Price. This is the price at which the government guarantees to buy farmers’ produce if the APMC traders do not offer a reasonable price.

The Revolutionized Market

Enough of the old, outdated systems. Let us bring in some modernization. Imagine that, in place of the old, messy APMC mandis, there are huge storage silos and warehouses owned by private companies. They store high-quality produce grown with the most high-tech, modern cultivation methods, which is then sold in supermarkets instead of untidy sabzi mandis. Farmers are finally earning the profits they deserve, thanks to the elimination of APMC middlemen. We are getting great deals on food everywhere in the country, thanks to high-tech storage and zero wastage. Sounds great, right? Everyone is happy. This is the moment when we stop imagining and start thinking, the step the government seems to forget every time. But before that, let us briefly look at the ordinances that were passed:

The Farmers’ Produce Trade and Commerce (Promotion and Facilitation) Bill, 2020: allows intra-state and inter-state trade of farmers’ produce outside the physical premises of APMC markets.

The Farmers (Empowerment and Protection) Agreement on Price Assurance and Farm Services Bill, 2020: creates a framework for contract farming through an agreement between a farmer and a buyer before the production or rearing of any farm produce.

The Essential Commodities (Amendment) Bill, 2020: allows the central government to regulate the supply of certain food items only under extraordinary circumstances, such as war and famine. Stock limits may be imposed on agricultural produce only if there is a steep price rise.

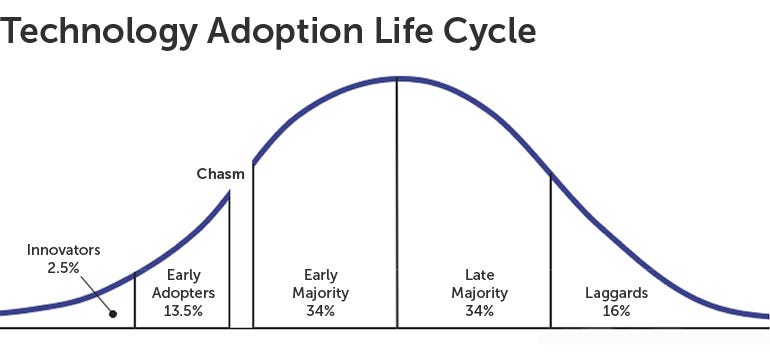

Do you see any flaws in this? If you and I went out there and started farming, it might not be much of an issue. But here we are talking about the majority of Indian farmers, who are poor and uneducated, and 85% of whom own less than 2 hectares of land. They would be far from having an equal say in contracts designed by profit-driven strategists sitting in the air-conditioned cabins of huge corporations, who will hardly think about the farmers’ well-being. Here come exploitation and slavery again. But farmers would still have the option of selling their crops to the government at MSP, right? The government says they would, yet it did not mention this in the ordinances. This is where the controversy begins.

We are all well aware of the most common strategy private companies will use to lure farmers: initially, they will offer great prices and attractive contracts to survive the competition. Farmers will then choose private contracts every time, eroding the APMC and MSP system. The government’s warehouses will become liabilities and stop receiving investment. Once the MSP scheme becomes completely ineffective, farmers will be left with no option but contract farming. This is when the private giants start filling their pockets with huge sums of money, needless to say, by taking a part of the farmers’ share. These entrenched monopolies will jeopardize everything: farmers will be exploited, and with no prohibition on hoarding, the prices of essential foods will fluctuate, hurting the middle class. This time the government cannot be relied upon either, as it will already have huge tax revenues rolling in.

So far, we have seen two scenarios, both of them imaginary. The government keeps promoting the first and the opposition the second. So where does the reality lie? It appears to lie somewhere in between, and it depends greatly on how the bills are implemented, how actively the government participates in these procedures, and whether it holds itself responsible for the consequences at the ground level.

Arising Questions

If middlemen are removed and trade outside the mandis is freed from state market fees, what happens to state government revenues? The bills may bring the latest technologies and professional farming and encourage cash crops, but since farmers cannot afford to own this equipment themselves, will this lead to slavery? Farmers may get better prices for their yield now, but will they continue to get them in the future?

Contract farming will assure good prices regardless of market conditions, but will farmers have enough power to take legal action if required? The government promises to continue MSP, so why not write it into the ordinance? One Nation One Market sounds good, but can farmers afford the transportation costs? Allowing stockpiling will reduce wastage and help distribute food evenly, but what about black marketing? If the system failed badly in the USA and Europe, why would it succeed in India?

These are the worries that led farmers to hop on their tractors and rally to India Gate, lodge protests, and block roads and railway tracks. There are political motives behind the protests as well, and we cannot deny that. Most of us have already lost trust in both the opposition and the ruling party, as everything they do appears to serve some propaganda or the party’s own interest.

Way Out / Conclusion

1) The government should guarantee MSP and the purchase of produce for welfare schemes through the APMCs, not just verbally but through a proper ordinance.

2) The government should play an active role as a regulator and facilitator for both corporations and farmers. It would be ideal if the private sector and the government filled each other’s gaps and worked hand in hand.

3) Farmers should have access to free and effective legal assistance whenever they need it.

4) The APMC system should be reformed and its illegal activities checked, instead of being removed completely.

5) Farmers should be educated about both systems and how to use them, so that they are not exploited or misled.

The system does have the potential to revolutionize Indian agriculture. As the farmers’ situation under the current system is already deteriorating, change is certainly needed. But each step must be taken very carefully, as the USA and Europe have already fallen prey to this.